This article is an edited transcript of an interview with our engineer about Amazon ElastiCache Serverless. It covers what the service is, why it is needed, and when it is best used. You can also watch this interview on our YouTube channel. Happy reading.

Q: Hello everyone! This is ITSyndicate. We are a leading provider of DevOps services with more than a decade of experience in the field. Our team specializes in effective DevOps solutions: we help optimize and improve infrastructures, ensuring their scalability, reliability, and security. Today, we have the opportunity to talk with our DevOps engineer about Amazon ElastiCache Serverless. This new product from Amazon has attracted much attention among developers and companies working in the cloud.

This is Ivan, our engineer. Hi, Ivan! I hope you are ready for an exciting and productive conversation, as the topic is fresh and relevant. How are things in general, and could you tell us more about yourself?

A: Of course, greetings. As you said, my name is Ivan. I work specifically in WebOps, integrating new cloud services into our clients' projects. I find the freshest and most exciting cloud innovations and investigate how they can help save time and money for our clients. If I find new features useful, I implement them in the project and maintain them.

Q: Thank you for the introduction. Can you tell me more about Amazon ElastiCache Serverless and why its launch is so significant for developers and companies working in the cloud?

A: We can start by explaining ElastiCache. It's a managed service from Amazon that deploys engines like Redis and Memcached for you. What does the Serverless prefix mean? It means you don't manage the servers. You don't have to worry about their availability, whether they are working incorrectly, or their updates. Amazon manages these servers and scales them automatically depending on how many resources you use. It could be 1 GB now, and in 10 minutes, it could be 2, 4, 8, etc.

It could be attractive for developers and others using this service because it can help save money and time and optimize resources. As a bonus, using a serverless service reduces greenhouse gas emissions. It may seem unrelated, but CO2 will be reduced. For additional emissions, Amazon also charges a fee or, so to speak, a penalty. So this can also be optimized using a Serverless solution.

Q: Cool. I couldn't even imagine that such a tool could be related to environmental protection. For me, actually, this is a revelation. Thank you.

Please tell me what differentiates ElastiCache Serverless from the standard ElastiCache offerings.

A: First of all, they are different services, and they work differently. Amazon uses a different infrastructure for the Serverless solution.

With regular ElastiCache, you are allocated specific nodes that do not scale on their own. Their capacity stays fixed until you resize them yourself, increasing or decreasing it.

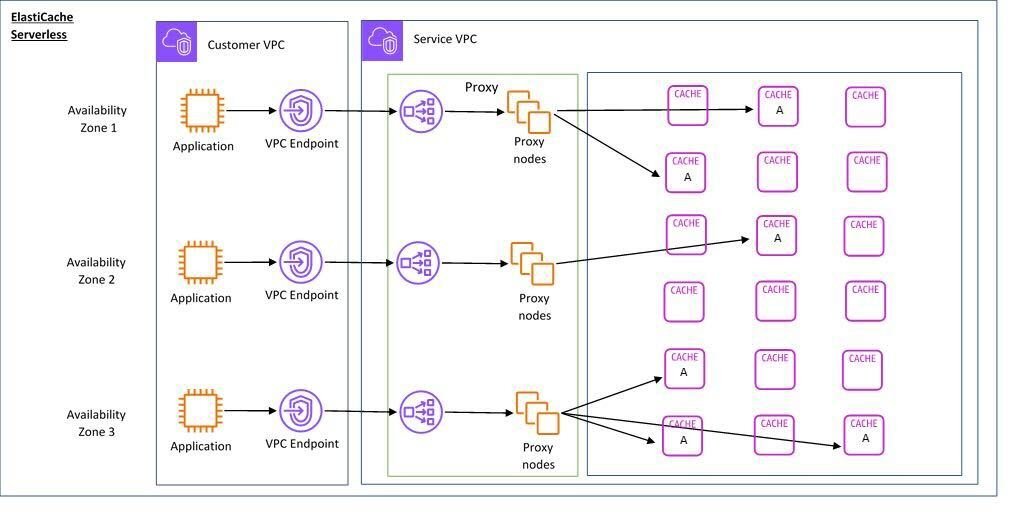

With Serverless, capacity scales independently of any specific nodes. Inside Amazon, there's a proxy server that routes all traffic to the distributed servers on which the ElastiCache engine is running. It could be either Redis or Memcached.

Q: Can you please tell us about the advantages of Serverless caching and its general value?

A: Yes, there are many advantages. One of the most significant is that it increases the availability of your services and application. It is much better when a single request fails than when a whole node goes down and your service stops working. Amazon guarantees 99.99% availability, which works out to less than an hour of downtime per year. That's a tremendous advantage of the serverless solution: your ElastiCache service keeps working regardless of whether a node fails.

Moreover, you use only as many resources as you need. With allocated nodes, you buy capacity in reserve so that you have headroom for even minimal scaling; with Serverless, you don't need to overpay for additional nodes. And the main thing is scaling itself, which is what Serverless exists for.

Q: Super. Thanks to this tool, we can achieve 99.99% uptime and easier service management. How do I understand whether I need to use a serverless solution or not?
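
As a quick sanity check on availability figures like the one above, a percentage can be converted into a yearly downtime budget. The sketch below is only arithmetic; the function name is made up for illustration and it says nothing about how Amazon measures its SLA.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def downtime_minutes_per_year(availability_percent: float) -> float:
    """Return the yearly downtime (in minutes) allowed by an availability percentage."""
    unavailable_fraction = 1 - availability_percent / 100
    return MINUTES_PER_YEAR * unavailable_fraction


if __name__ == "__main__":
    for target in (99.9, 99.99):
        minutes = downtime_minutes_per_year(target)
        print(f"{target}% availability -> {minutes:.1f} minutes of downtime per year")
```

With these inputs, 99.9% allows roughly 526 minutes per year and 99.99% roughly 53 minutes.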

A: You need to know your workload, i.e., how loaded your Redis or Memcached will be. If you have significant spikes, i.e., the load is unstable, then you definitely need ElastiCache Serverless. But if it's stable and uses a predictable amount of resources every day, there's no need for a Serverless solution. As for economic efficiency, as I said, it can scale and thus save you money. In short, we only pay for what we use and not for resources we don't use.

Q: Okay, that’s clear. In your opinion, are there any limitations or points developers should know about before starting to work with this service in an existing architecture?

A: Well, there can be several problems. An application may depend on outdated library versions, which means it simply will not be able to work with a more recent engine version. ElastiCache Serverless supports only version 7.1 and higher. The client library must also be able to work with encryption. If the application cannot work with version 7.1 or higher, or cannot do encryption, then you need to update your app to use this service. That's why you should always follow the trends and update in time to be able to use such new services and resources.
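
A minimal sketch of the two compatibility checks mentioned in this answer, assuming a Python application that uses the redis-py client. The helper names and the example hostname are hypothetical; `redis.Redis(..., ssl=True)` is the redis-py way to request an encrypted connection.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn a version string such as '7.1.0' into a comparable tuple (7, 1, 0)."""
    return tuple(int(part) for part in version.split("."))


def meets_minimum(version: str, minimum: str = "7.1") -> bool:
    """Check that `version` is at least `minimum` (7.1 is the floor named in the interview)."""
    return parse_version(version) >= parse_version(minimum)


def connect_with_tls(host: str, port: int = 6379):
    """Open an encrypted connection with redis-py.

    The import is done lazily so the version helpers above work
    even where the third-party `redis` package is not installed.
    """
    import redis  # third-party: pip install redis

    return redis.Redis(host=host, port=port, ssl=True, decode_responses=True)


# Hypothetical usage; the endpoint below is a placeholder, not a real cache:
# client = connect_with_tls("my-cache.example.com")
```

For example, `meets_minimum("7.0.15")` is false while `meets_minimum("7.1.0")` is true, so an application pinned to an older engine version would need an upgrade first.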


Q: Thanks for your insight! Now, let's discuss an interesting question regarding pricing and managing expenses for this service. What is the general pricing model for ElastiCache Serverless?

A: As with any service, you pay for what you use: the resources and the traffic. Amazon's pricing works the same way here. Several times a minute, it samples how many gigabytes of memory you're using, averages those results, and bills you accordingly. For one gigabyte, if I'm not mistaken, it's about 15 cents per hour (but the price may change occasionally). That's for memory; CPU is billed per million processing units, at about 0.04 cents. Memory matters more here because Redis is an in-memory database built for exactly this use case.
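
To make the pay-for-what-you-use model concrete, here is a rough estimator. It is a sketch under stated assumptions: the function name is invented, and the two prices in the usage note are the approximate figures quoted in the interview, not current AWS rates.

```python
def estimate_cost(
    hourly_gb_samples: list[float],
    million_compute_units: float,
    price_per_gb_hour: float,
    price_per_million_units: float,
) -> float:
    """Estimate a bill from stored data plus compute units consumed.

    Each entry in `hourly_gb_samples` is the average data stored (in GB)
    during one hour, so the sum of the list is the total GB-hours.
    """
    storage_cost = sum(hourly_gb_samples) * price_per_gb_hour
    compute_cost = million_compute_units * price_per_million_units
    return storage_cost + compute_cost


if __name__ == "__main__":
    # One day: 2 GB for 20 quiet hours, then a 4-hour spike at 8 GB.
    samples = [2.0] * 20 + [8.0] * 4
    # Assumed prices from the interview: $0.15 per GB-hour, $0.0004 per million units.
    print(f"Estimated daily cost: ${estimate_cost(samples, 50, 0.15, 0.0004):.2f}")
```

In this example the day adds up to 72 GB-hours, so the short spike is paid for only during the four hours it lasts.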

Q: What advice can you give for effectively managing and using this service?

A: I don't think you should store valuable data in ElastiCache, the kind of data you are afraid of losing. For example, ElastiCache could back the service in your application that is responsible for the shopping cart in a store. This is a good use case. Your users can add goods to the cart, and we can store those users' items in Redis. As for reducing expenses, you can rotate this cache. You can build functionality that deletes items that are no longer used instead of keeping them in the database. For example, if the client didn't make a purchase within a day or a week, you can clear those items from memory. Remember that this is an in-memory store: it works in RAM, not on the hard drive, so the data lives only as long as it is held in memory.

Q: Thank you. Pricing and expenses are clear. But let's talk about security. What security features does ElastiCache Serverless offer in general?

A: It allows you to use encryption. That is, your data is encrypted both inside the database and during transmission, so it cannot be intercepted by anyone else. Cross-site scripting and domain substitution cannot cause this data to leak from the database. The only way to get the data is through the client, and for that you would have to log in as another user, which is almost impossible.
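
The cart idea with automatic cleanup could look roughly like this. It is a sketch, assuming `client` is a redis-py `redis.Redis` instance (or anything exposing the same `hset`, `expire`, and `hgetall` methods); the key naming and the one-week lifetime are illustrative choices, not something prescribed in the interview.

```python
CART_TTL_SECONDS = 7 * 24 * 60 * 60  # one week, as in the example above


def cart_key(user_id: str) -> str:
    """Build the key under which one user's cart is stored (naming is an assumption)."""
    return f"cart:{user_id}"


def add_to_cart(client, user_id: str, item_id: str, quantity: int) -> None:
    """Store an item in the user's cart hash and restart the expiry timer."""
    key = cart_key(user_id)
    client.hset(key, item_id, quantity)
    # Every update pushes the deadline back; an idle cart expires on its own.
    client.expire(key, CART_TTL_SECONDS)


def get_cart(client, user_id: str) -> dict:
    """Return the cart contents, or an empty dict once the key has expired."""
    return client.hgetall(cart_key(user_id))
```

Letting the engine expire idle carts means no separate cleanup job is needed, and abandoned carts stop taking up billed memory.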

Q: And can you share best practices for using this service?

A: Here, you need to understand whether you need it at all. If your workload is unstable, you are testing some new features, or you have a startup, the best practice would be to use it as a managed service, simply because of cost efficiency. If you have predictable high spikes in workload and need high availability for this service, then choose a serverless solution.

Another best practice: use an infrastructure-as-code approach. Use Terraform/Terragrunt or CloudFormation from Amazon. It is advisable to keep the entire infrastructure in code so you can conveniently manage and configure it.

Q: I came across the news that, from March 20th, Redis has switched to a dual-licensing model. Ivan, what do you think this can cause, what does it mean, and how can it affect projects?

A: This license means there will be some restrictions in upcoming versions of Redis. I don't know about Memcached, but definitely Redis. One of these restrictions is that third-party services will no longer be able to use the Redis engine for free, as was the case before. That is, you will need to negotiate with the Redis company and pay them so you can use their engine in your service. This is quite sad news for most people who use Redis because they may have to consider moving to self-hosted deployments, which will increase operational costs. Dual licensing means this will affect cloud solutions, too. I don't think Redis will become unusable, though: I believe enthusiasts will fork the existing version and continue to develop it. Cloud providers, such as Amazon, Azure, and GCP, will be able to use that fork as open source, as they did before. If I'm correct, something similar happened with Elasticsearch. Elasticsearch also released an updated licensing model that prohibits using it in third-party services, so enthusiasts made a fork that is open source and can be used anywhere.

Q: So, in your opinion, this is quite significant news, and I think we will be able to see the results in some time and, as you say, maybe we will have some new opportunities, new services that will replace what is now possibly inaccessible.

A: Yes, there are many alternatives to Redis, and I don't think replacing it with another service will be a problem. We just need to refactor a large number of applications, which will not be very convenient and will be costly for other application owners.

Q: Based on your experience, please tell us what impressed you the most about this new product from Amazon. Maybe you've identified something interesting for yourself?

A: What surprised me the most is that we can double the existing resources. That is, if you have a load of 2 GB, in 10 minutes, you can already have 4. For me, this is very fast scaling, and how Amazon implemented it was quite interesting. As I said, they put a proxy in front of many engines and have their own proxy controllers, which route requests to the corresponding free nodes located inside a proxy fleet and inside Amazon's VPC.
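
Taking the doubling figure from this answer at face value, a back-of-the-envelope estimate of how long a cache needs to grow to a target size might look like this. The 10-minute interval is the number quoted in the interview, treated here as an assumption; actual scaling behavior may differ.

```python
def minutes_to_reach(current_gb: float, target_gb: float, doubling_minutes: float = 10.0) -> float:
    """Estimate the time to grow from current_gb to target_gb if capacity doubles every interval."""
    if current_gb <= 0 or target_gb <= 0:
        raise ValueError("capacities must be positive")
    doublings = 0
    capacity = current_gb
    # Count how many doublings are needed to meet or exceed the target.
    while capacity < target_gb:
        capacity *= 2
        doublings += 1
    return doublings * doubling_minutes


if __name__ == "__main__":
    print(minutes_to_reach(2, 16))  # three doublings: 2 -> 4 -> 8 -> 16
```

Under that assumption, going from 2 GB to 16 GB takes three doublings, or about half an hour, which is worth knowing when a spike is expected to arrive faster than that.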

Q: What advice could you give to teams considering a transition to ElastiCache Serverless specifically?

A: If you have the opportunity and want to experiment, I recommend using ElastiCache for things like sessions and, of course, caching. As I said, it works for storing the items in a user's basket in an online store. Likewise, when a user logs in and has to be logged out after some time, you can keep that session in ElastiCache. This service can also be used for messages. That is, if you have a list of messages and need to store them somewhere, this can be done with a list in Redis, for example.

One of the interesting things is rate limiting. You keep an integer counter that tracks how many requests were made, for example, to the same basket. If you see that a user made 1000 requests in 5 minutes, that is anomalous activity, and you need to deal with it. This way, we can track such moments and respond to them quickly.

In general, you can try it in a test environment and see if it helps reduce the operational load on your developers, DevOps, or whoever deals with server management, and whether it brings savings. Saving time and money is always what matters most for a business.

Q: Super! Ivan, thank you very much for your time and meaningful answers. I hope that many found this useful and that it gave them an opportunity to better understand this tool and decide whether it is effective for them or not. Thank you for being with us. Bye, and see you at the new meetings!
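
The rate-limiting idea from this answer can be sketched as a fixed-window counter. This assumes `client` is a redis-py `redis.Redis` instance (or any object with the same `incr` and `expire` methods); the function name and key format are hypothetical, and the defaults mirror the 1000 requests in 5 minutes mentioned above.

```python
def is_rate_limited(client, user_id: str, limit: int = 1000, window_seconds: int = 300) -> bool:
    """Count one request for the user and report whether the limit is exceeded.

    Fixed-window counter: the first request in a window creates the counter
    and sets its expiry; later requests only increment it.
    """
    key = f"rate:{user_id}"  # key naming is an assumption for this sketch
    count = client.incr(key)
    if count == 1:
        # First hit in this window: start the countdown for the whole window.
        client.expire(key, window_seconds)
    return count > limit
```

A fixed window is the simplest variant: it can let a short burst through at a window boundary, which is usually acceptable for spotting anomalous activity like the example in the answer.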
