Talking about monitoring

Monitoring is the main tool a system administrator has. Admins need monitoring, and monitoring needs admins!

The monitoring paradigm itself has changed over the past few years. A new era has arrived: if you still monitor your infrastructure as just a set of servers, you are monitoring almost nothing.

Furthermore, the term "infrastructure" now implies a multi-level architecture, and each level needs its own monitoring tools.

In addition to problems such as "the server has crashed" or "you need to replace the disk", you need to catch problems that occur at the application and business levels: "the interaction with this microservice has slowed down", "there are not enough messages in the queue at the current time", "query and request execution time in the application has grown", and so on.

Experience

Our team currently manages around 5000 servers in an enormous variety of configurations, from a single dedicated server to large projects consisting of hundreds of hosts in Kubernetes. We need to keep track of all of it somehow: to notice in time that something has broken and to repair it quickly. To do this, we need to understand what monitoring is, how it is built in modern realities, how to design it correctly, and what it should do. That is what I'd like to talk about.

In this article I would like to describe ITsyndicate’s vision of monitoring in general and what it should be set up and configured for.

How it was before

Ten years ago, monitoring was much easier than it is now. Then again, the applications were simpler too.

Mostly only system indicators were monitored: CPU, memory, disks, and network. That was quite enough, because there was one application running on PHP and nothing else was used. The problem is that these metrics tell a system administrator very little: either it works or it does not. It is really difficult to understand what exactly is happening with the application itself and what caused it to go down.

If the problem is at the application level (not just "the site does not work", but "the site works, but something is wrong") and the client reports an issue, we have to start an investigation, because basic system indicators and metrics alone would not have let us notice such a problem ourselves.

Nowadays

Now the systems are completely different: scaling, microservices, containerization, and so on. Systems have become dynamic. Often no one really knows how exactly everything works, how many servers the system uses, or how it was deployed. The project lives its own life. Sometimes it is not even clear which services start where and when (as in Kubernetes, for example).

More complex systems naturally bring a greater number of possible problems. Application metrics have appeared, such as the number of running threads in a Java application, the frequency of garbage collector pauses, the number of events in a queue, and so on. It is also very important to monitor how the system scales. Let's say you use the Kubernetes HPA: you need to know how many pods are running and make sure that metrics from every one of them reach the application monitoring system.

If you set up proper monitoring that covers not only basic system and server metrics but also collects application metrics and runs some custom checks, problems become more obvious and easier to track.
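The last check can be sketched in a few lines. This is only an illustration: it assumes you can already obtain two lists, the pod names the orchestrator reports as running and the pod names that have recently sent metrics. The function name `find_silent_pods` and the sample pod names are hypothetical.

```python
def find_silent_pods(running_pods, reporting_pods):
    """Return the running pods that are not sending metrics, sorted by name."""
    return sorted(set(running_pods) - set(reporting_pods))


# Hypothetical data: three pods are running after a scale-up,
# but only two of them have reported metrics.
running = ["web-1", "web-2", "web-3"]
reporting = ["web-1", "web-3"]
print(find_silent_pods(running, reporting))  # ['web-2']
```

Any pod in the result is running but invisible to monitoring, which is worth an alert of its own.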

Conventionally, we can divide problems into two large groups:

- the basic user-facing functionality does not work

- something is working, but not as it should

Nowadays we need to monitor not only the discrete "works / doesn’t work" state, but also many more gradations in between. This allows you to catch a problem before the application crashes.

In addition, you need to follow business indicators too. The business wants to see money graphs: how often does a customer place a new order, how much time has passed since the last order, and so on. That is also a monitoring task.
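As a minimal sketch of such gradations, a metric value can be mapped to one of several states instead of a yes/no answer. The threshold values and the response-time example below are hypothetical, and the sketch assumes that higher values are worse.

```python
def classify(value, warning, critical):
    """Map a metric value to a state; higher values are assumed to be worse."""
    if value >= critical:
        return "CRITICAL"
    if value >= warning:
        return "WARNING"
    return "OK"


# Hypothetical thresholds for response time in milliseconds.
for response_ms in (120, 450, 900):
    print(response_ms, classify(response_ms, warning=300, critical=800))
```

The WARNING band is what buys you time: the application still works, but you already know it is drifting.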
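A business check of this kind might look like the sketch below. It assumes you can read the timestamp of the most recent order from somewhere; the function name `order_gap_alert` and the 30-minute limit are hypothetical and would depend on how often orders normally arrive.

```python
from datetime import datetime, timedelta


def order_gap_alert(last_order_at, now, max_gap):
    """Return an alert message if no order arrived within max_gap, else None."""
    gap = now - last_order_at
    if gap > max_gap:
        minutes = int(gap.total_seconds() // 60)
        return f"No new orders for {minutes} minutes"
    return None


# Hypothetical example: the last order came in an hour ago.
now = datetime(2024, 1, 1, 12, 0)
last_order = datetime(2024, 1, 1, 11, 0)
print(order_gap_alert(last_order, now, max_gap=timedelta(minutes=30)))
```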

True and badass monitoring

General project engineering

The idea of what exactly needs monitoring should be laid down when the application and its architecture are being developed. It is less about the server architecture and more about the architecture of the application as a whole.

Developers and architects should understand which parts of the system are critical for the project and for business operation. They should plan in advance how the health of those parts will be checked.

Monitoring should be convenient for the system administrator and give a clear picture of what is happening. The purpose of monitoring is to deliver an alert in time and to let you quickly understand, from the graphs, numbers, and states (OK, WARNING, CRITICAL, for example), what exactly is happening and what needs to be repaired.

Monitoring metrics

You need to understand exactly what is normal and what is not, so there must be sufficient historical information about the system’s state. The task is to cover all possible anomalies with corresponding alerts.

When a problem arises, you really want to understand what caused it. When you receive an alert that your application is not working, you want to know which related parts of the system are misbehaving and what other anomalies there are. There should be clear graphs collected in dashboards, from which you can immediately see where the problem hides.
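One simple way to turn history into a definition of "normal" is to compare the current value with the mean and standard deviation of past values. This is only a sketch of that one technique, not a recommendation of a specific method; the function name `is_anomaly`, the three-sigma limit, and the sample numbers are assumptions.

```python
from statistics import mean, stdev


def is_anomaly(history, current, max_sigmas=3.0):
    """Flag current if it is more than max_sigmas standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough history to say what is normal
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return current != mu  # history is perfectly flat
    return abs(current - mu) > max_sigmas * sigma


# Hypothetical history of requests per second.
history = [100, 102, 98, 101, 99]
print(is_anomaly(history, 103))  # False
print(is_anomaly(history, 150))  # True
```

Metrics with daily or weekly cycles need a baseline taken from the same time window, otherwise normal peaks will look like anomalies.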

Monitoring notifications (alerts)

Alerts should be as clear as possible: the administrator must understand what the alert is about, which documentation it refers to, or at least who to call, even if he or she is not familiar with the system. There should definitely be clear instructions on what to do and how to solve the problem.
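A minimal sketch of what such an alert could carry is shown below. The field names, the runbook URL, and the contact are all hypothetical; the point is only that the message itself tells the reader what happened, where the instructions are, and who to call.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    severity: str      # for example WARNING or CRITICAL
    summary: str       # what is wrong, in plain words
    runbook_url: str   # where the instructions live (hypothetical URL below)
    contact: str       # who to call if the runbook does not help


def format_alert(alert):
    """Render an alert as a short, self-explanatory message."""
    return (
        f"[{alert.severity}] {alert.summary}\n"
        f"Runbook: {alert.runbook_url}\n"
        f"Escalate to: {alert.contact}"
    )


alert = Alert(
    severity="CRITICAL",
    summary="Order queue has not been consumed for 10 minutes",
    runbook_url="https://wiki.example.com/runbooks/order-queue",
    contact="backend on-call",
)
print(format_alert(alert))
```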

These instructions on how to react must be updated regularly. If everything runs through an orchestration system, including every change that gets deployed, then everything will probably work fine: the orchestration system lets you check whether the monitoring is still up to date.

Monitoring should expand after each incident: if a problem slipped past the monitoring tool, add a check for it so that next time it does not come as a surprise to you and your team.

Monitoring of the monitoring :)

Above all, you need to know that the monitoring system itself is working. There must be some external custom script that checks whether the monitoring system is working properly. No one wants to be woken by a phone call because the monitoring system went down along with the entire data center and nothing told you about it.
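The core of such an external script can be as small as a freshness check on a heartbeat. The sketch below assumes the monitoring system regularly publishes a heartbeat timestamp (in seconds since the epoch) that a script running outside the data center can read; how the timestamp is fetched, the function name `check_monitoring`, and the 120-second limit are all hypothetical.

```python
import time


def check_monitoring(last_heartbeat, now=None, max_age_seconds=120):
    """Return a state string based on how old the last heartbeat is."""
    if now is None:
        now = time.time()
    age = now - last_heartbeat
    if age > max_age_seconds:
        return f"CRITICAL: no heartbeat from monitoring for {int(age)} seconds"
    return "OK"


# Hypothetical example: the last heartbeat arrived ten minutes ago.
print(check_monitoring(last_heartbeat=time.time() - 600))
```

The script must run somewhere that does not share a failure domain with the monitoring system, otherwise both go silent together.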

Basic Tools

In modern scaling systems you probably have Prometheus configured, because few alternatives provide equally detailed metrics. To view those metrics as convenient graphs you need Grafana, because the graphs built into Prometheus are so-so.

We also need some kind of APM (Application Performance Monitoring). This is either a self-written system built on OpenTracing, Jaeger, or something similar, but that is rarely done. Mostly, either New Relic or stack-specific systems such as Dripstat are used. If you have more than one monitoring system, not just plain Zabbix, you still need to work out how to collect all these metrics and how to distribute alerts: who to notify, who to escalate to and in what order, what each alert applies to, and what to do with all of it. Let's go through the tools in order.

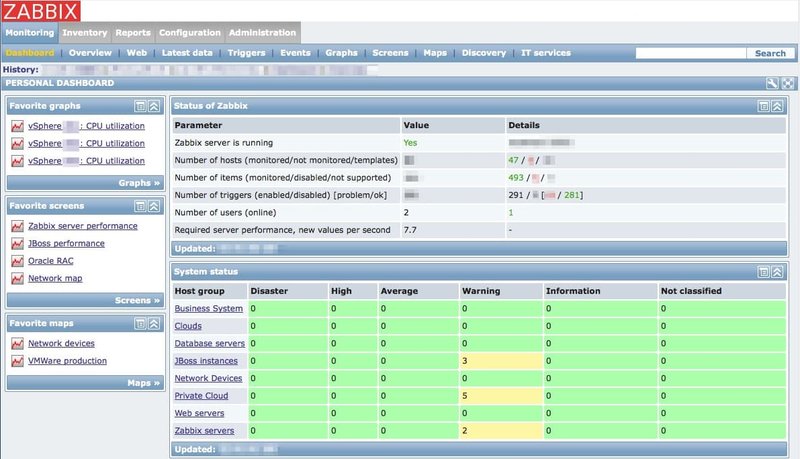

Zabbix

Zabbix is no longer the most convenient system. It has issues with custom metrics, especially when the system scales and you need to define roles. Although you can build highly customized graphs, alerts, and dashboards, all of it is not very convenient or dynamic. It is a static monitoring system.

Prometheus + Grafana

Prometheus is an excellent solution for collecting a huge number of metrics. Its capabilities for custom alerts are quite similar to those of Zabbix. You can display graphs and build alerts for any wild combination of several parameters. It is all very cool, but it is very inconvenient to look at, so Grafana is added to it as a visualization tool.

Grafana is beautiful. It does not do the monitoring itself, but it gives you extensive ways to read everything. There are probably no better graphs.
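Prometheus collects metrics by scraping a plain-text format over HTTP, which is part of why custom metrics are easy to add. The sketch below renders a single gauge in that format by hand, purely to show what Prometheus reads; the metric name `queue_messages` and its label are hypothetical, and a real service would more likely use an official client library than build the text itself.

```python
def render_gauge(name, help_text, value, labels=None):
    """Render one gauge in the Prometheus text exposition format.

    Label values are not escaped here, so this sketch only suits
    simple values without quotes, backslashes, or newlines.
    """
    label_str = ""
    if labels:
        pairs = ",".join(f'{k}="{v}"' for k, v in sorted(labels.items()))
        label_str = "{" + pairs + "}"
    return (
        f"# HELP {name} {help_text}\n"
        f"# TYPE {name} gauge\n"
        f"{name}{label_str} {value}\n"
    )


print(render_gauge("queue_messages", "Messages waiting in the queue", 42,
                   {"queue": "orders"}))
```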

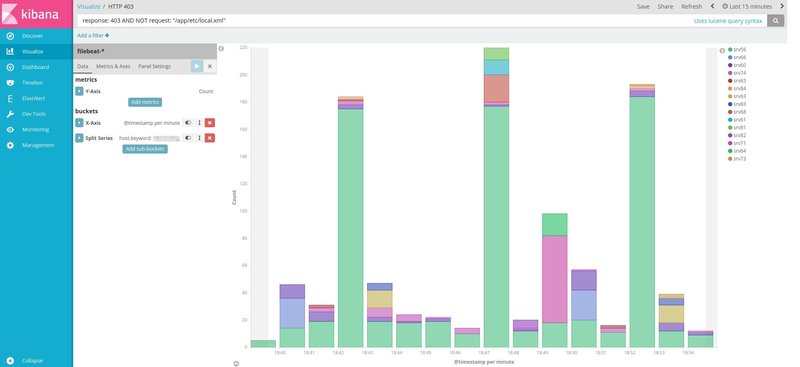

ELK + Graylog

ELK and Graylog are used to collect logs of events in the application. Certainly, they can be useful for developers, but for detailed analytics they are usually not enough.

New Relic

New Relic is an APM that is very useful for developers. It lets you see whether something is wrong with your application right now: which external service is misbehaving, which database is responding slowly, or which interaction between systems is working incorrectly.

Conclusion

First, while the system is being designed, you should know which important indicators need monitoring, which parts of the system are critical for its work, and how to test them in advance.

Moreover, there should not be too many alerts; they should be relevant, clearly show what has broken, and point to how to fix it.

Finally, to monitor business indicators properly, you need to understand how the business processes are arranged, what your analysts need, whether there are enough tools to measure the required indicators, and how quickly you can find out if something goes wrong.